So I have an older Dell laser printer, the B1160w. It came out back in 2012, but it's a perfectly fine home printer for the occasional print job, and it still works great after all these years, so I see no compelling reason to buy a new one.

But there's a problem: macOS support. No macOS drivers have been released for it since 2017. Starting with Catalina, Apple began requiring code signing for executables, and the official Dell driver includes an executable that refuses to run because it isn't signed. Despite my best efforts, short of turning off Gatekeeper entirely, I couldn't get it to work.

The printer itself is fine, though, and there is absolutely no reason to create more electronic waste purely for software reasons. Thanks to open-source software, there is another option: CUPS.

Configuring CUPS and the Printer

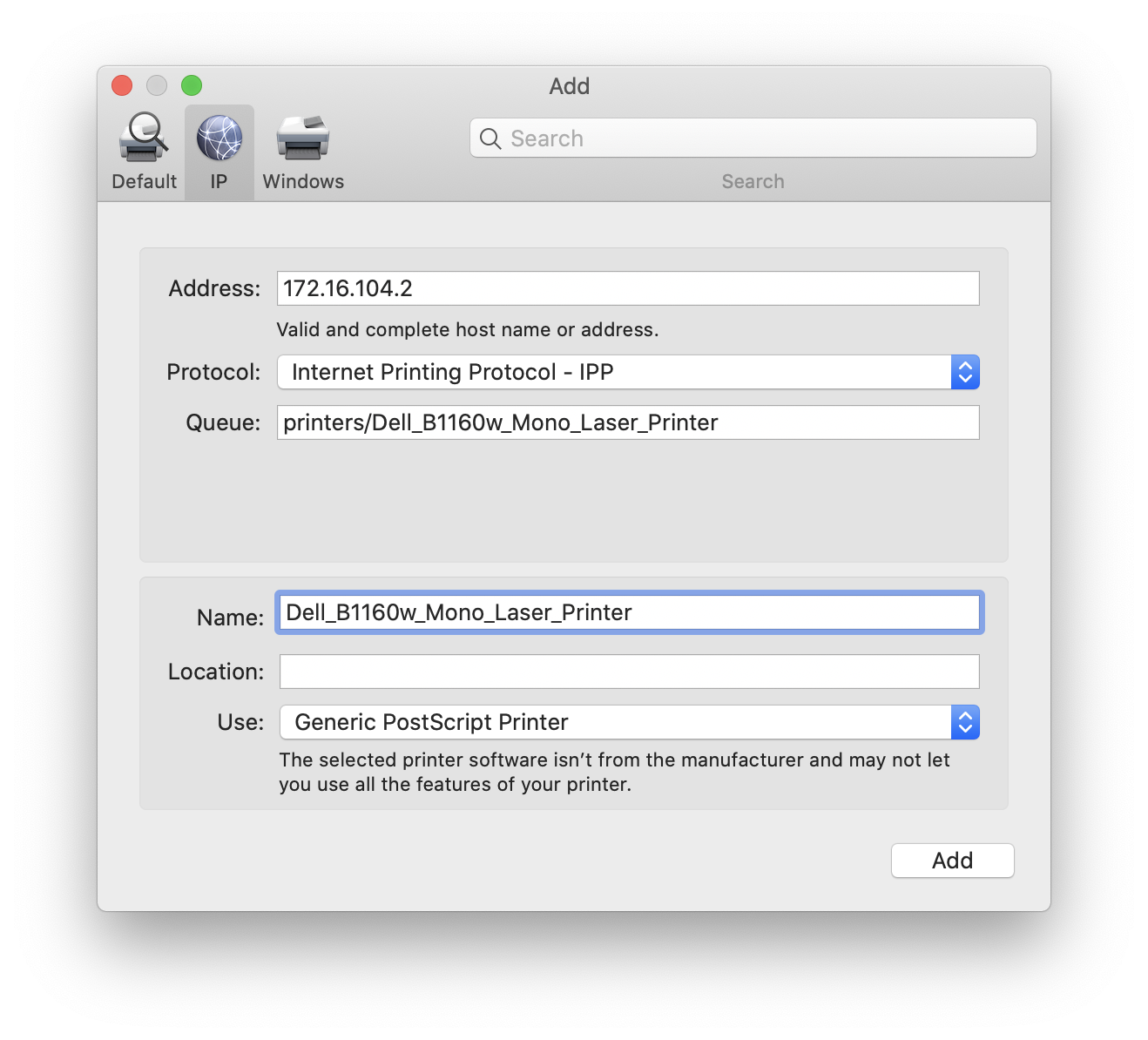

Since I've been moving a lot of things to Docker, I decided to run a CUPS Docker image on one of my servers. Configuring the Dell printer there was very straightforward, because the Linux driver is still available. Setting it up in macOS was more of a challenge, mostly because the GUI for adding network CUPS printers is obtuse (especially considering that macOS itself uses CUPS under the hood). In short, these are the settings for a CUPS printer:

- IP Printer (tab at the top)
  - Address: the IP address of your CUPS server
  - Protocol: IPP
  - Queue: "printers/" followed by the printer's name in CUPS
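As far as I can tell, those fields boil down to a single IPP URI of the form ipp://server:631/printers/name, which is handy to know when the GUI is being uncooperative. The minimal Python sketch below just builds that URI. It assumes CUPS is listening on its default port, 631, and the server address and the queue name "Dell_B1160w" are hypothetical placeholders, not values from my actual setup.

```python
from urllib.parse import quote


def cups_ipp_uri(server: str, printer_name: str, port: int = 631) -> str:
    """Build the IPP URI for a printer queue shared by a CUPS server.

    Assumes the CUPS default port (631) unless another is given, and that
    the queue lives under the standard "printers/" resource path.
    """
    # Percent-encode the queue name (safe="" also encodes "/") so the
    # result is always a single, valid URI path segment.
    queue = "printers/" + quote(printer_name, safe="")
    return f"ipp://{server}:{port}/{queue}"


if __name__ == "__main__":
    # Hypothetical server address and queue name, for illustration only.
    print(cups_ipp_uri("192.168.1.50", "Dell_B1160w"))
```

The "Queue" field in the macOS dialog corresponds to the path part of this URI, which is why it has to start with "printers/".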